
Performance of Dalvik versus native C compilation

Android application programs are usually written in the Java programming language. Java source code is compiled to bytecode, which is then interpreted by the Java virtual machine. Current virtual machines (VMs for short) use two tricks to improve performance:

- compile bytecode to machine code at run time (JIT: just-in-time compilation)

- optimize machine code according to usage patterns (HotSpot technology)
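To observe the JIT/HotSpot warm-up effect yourself on a desktop JVM, a minimal sketch like the one below may help. It is an illustration under stated assumptions, not a rigorous benchmark: the class `WarmupDemo` and the workload `sumOfSquares` are hypothetical, timings depend heavily on the VM and the machine, and a careful measurement would use a dedicated harness such as JMH.

```java
// Hypothetical warm-up sketch: runs the same CPU-bound method several times
// and prints the elapsed time per round. On a JIT/HotSpot VM the early rounds
// typically run (partly) interpreted, while later rounds use compiled code.
public class WarmupDemo {

    // Deliberately simple "hot" method (hypothetical workload).
    // For n = 1_000_000 the result (~3.3e17) still fits in a long.
    static long sumOfSquares(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }

    public static void main(String[] args) {
        final int n = 1_000_000;
        long checksum = 0; // printed at the end so the work is not optimized away
        for (int round = 1; round <= 5; round++) {
            long start = System.nanoTime();
            checksum += sumOfSquares(n);
            long elapsedMicros = (System.nanoTime() - start) / 1_000;
            System.out.printf("round %d: %d us%n", round, elapsedMicros);
        }
        System.out.println("checksum: " + checksum);
    }
}
```

Typically the first round or two are noticeably slower than the rest, but the exact numbers vary from run to run and between VMs, so treat them as indicative only.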

Despite all these tricks, native code produced by C/C++ compilers is considerably faster than JIT/HotSpot code; see, for example, the shootout (dead link) from 2008, or The Computer Language Benchmarks Game (dead link).

For Intel/AMD x86, one can buy a "real" compiler for $3000: Excelsior JET (dead link), which is termed an "ahead-of-time" compiler, in contrast to the ordinary Java compiler, which only produces bytecode. For ARM, there seems to be no ahead-of-time Java compiler.

On Android, the Java virtual machine is called Dalvik.

An interesting article in Android Benchmarks shows that there is plenty of potential for performance improvement when going from Dalvik to plain C: almost a factor of 10. By contrast, Java 6 with HotSpot technology is only about 25% slower than plain C, admittedly in a few individual cases. This is "old" data, though, from around 2009.

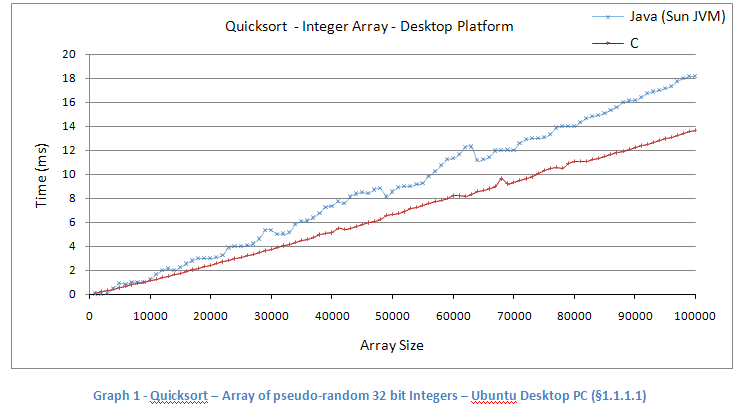

See the graphs from the above article.


Google bought FlexyCore to improve the performance of Dalvik. With Android KitKat, one has the option to switch to ART, the new runtime environment, which speeds up the execution of Java binaries.

See the picture below from Dalvik vs. ART vs. C for a recent performance comparison of Dalvik, ART, and plain C; it still shows a gap of a factor of 3 between Dalvik and plain C: